Jim Crocker

25th October, 2025

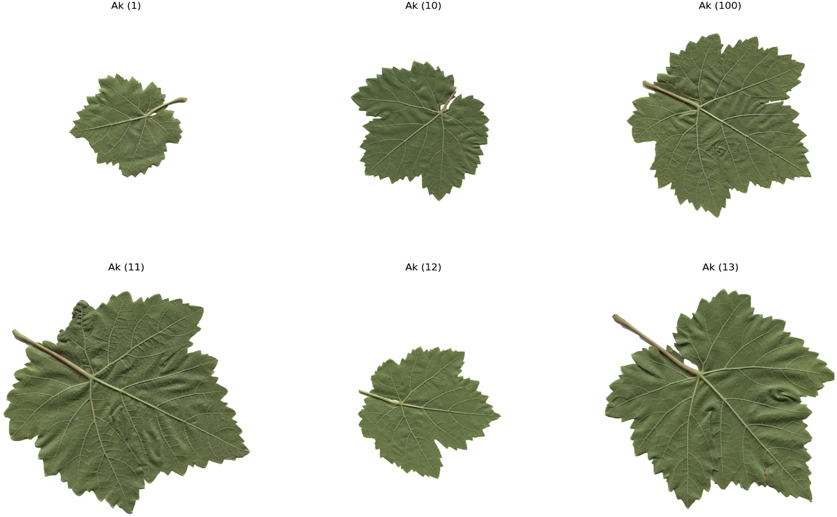

A sample of leaves from the ‘Ak’ grape variety, showcasing the distinct shape and vein patterns that differentiate it from other varieties.

Key Findings

- This study, conducted on five grape leaf varieties, aimed to improve classification accuracy using deep learning.

- Fine-tuning a DenseNet201 neural network model, by adjusting layers like BatchNormalization and Dropout, significantly boosted classification performance compared to other models.

- Tuning the parameters of the Adam optimizer and extracting relevant image features further enhanced the model’s ability to accurately identify grape leaf varieties.

The study began by creating a detailed dataset of images representing five different grape leaf varieties. To ensure the model could accurately identify leaves under various conditions, the dataset was expanded through a process called data augmentation. This involved creating slightly altered versions of the existing images – rotating them, shifting their position, and making other subtle changes. This technique helps the model learn to recognize leaves regardless of minor variations in lighting, angle, or orientation.
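As a rough illustration of the idea, the sketch below produces altered copies of a tiny grayscale image using only the Python standard library. The function names, the 90-degree rotation, the one-pixel shift, and the horizontal flip are all assumptions made for this example; the article does not state which transformations or parameter ranges the researchers actually used.

```python
from typing import List

Image = List[List[int]]  # grayscale image stored as rows of pixel values


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def shift_right(img: Image, pixels: int, fill: int = 0) -> Image:
    """Shift every row right by `pixels`, padding the left edge with `fill`."""
    width = len(img[0])
    pixels = max(0, min(pixels, width))
    return [[fill] * pixels + row[: width - pixels] for row in img]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right (an assumed extra transformation)."""
    return [row[::-1] for row in img]


def augment(img: Image) -> List[Image]:
    """Return the original image plus three altered copies."""
    return [img, rotate90(img), shift_right(img, 1), flip_horizontal(img)]


if __name__ == "__main__":
    leaf = [[1, 2], [3, 4]]
    for variant in augment(leaf):
        print(variant)
```

Each original image yields several training samples that share the same label, which is how augmentation enlarges a dataset without collecting new photographs. Real pipelines typically apply small random rotations and shifts through an image library rather than the fixed transformations shown here.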

The core of the approach is a DenseNet201 model. DenseNets are a type of convolutional neural network (CNN) known for their efficiency and performance in image recognition tasks [2]. CNNs are loosely inspired by the structure of the human visual cortex and are particularly good at identifying patterns in images. DenseNets achieve high performance through dense connections between layers: within a dense block, each layer receives the outputs of all preceding layers as input. This lets the network reuse features and learn more complex representations of the data.
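The dense connection pattern can be sketched in a few lines of plain Python. This is a toy model under stated assumptions: features are flat lists of numbers rather than image tensors, and the `sum_layer` function stands in for a real convolutional layer. The name `dense_block` is hypothetical and not taken from the study.

```python
from typing import Callable, List

Features = List[float]
Layer = Callable[[Features], Features]


def dense_block(x: Features, layers: List[Layer]) -> Features:
    """Run `layers` so that each one sees every earlier output, concatenated."""
    collected = [x]  # outputs produced so far, starting with the block input
    for layer in layers:
        concatenated = [value for feats in collected for value in feats]
        collected.append(layer(concatenated))
    # The block output is the concatenation of the input and all layer outputs.
    return [value for feats in collected for value in feats]


def sum_layer(inputs: Features) -> Features:
    """Toy stand-in for a convolutional layer: emits one new feature."""
    return [sum(inputs)]


if __name__ == "__main__":
    # Layer 1 sees [1, 2]; layer 2 sees [1, 2, 3].
    print(dense_block([1.0, 2.0], [sum_layer, sum_layer]))
```

The key point is that the input to each layer grows as the block gets deeper, because earlier features are carried forward rather than replaced.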

However, simply using a pre-existing DenseNet201 model isn’t always optimal. The researchers focused on fine-tuning the model’s parameters to specifically suit the task of grape leaf classification. This involved several key adjustments. First, they incorporated BatchNormalization and GlobalAveragePooling2D layers. BatchNormalization standardizes the values passed between layers, improving training stability and speed. GlobalAveragePooling2D replaces each feature map with its average value, which shrinks the number of values fed to the final layers and makes the model more efficient and less prone to overfitting.
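To make these two operations concrete, here is a minimal standard-library sketch of what each one computes. It is an illustration, not the study's code: `batch_normalize` handles a single feature across a batch in training mode (library layers also track running statistics for use at inference time), and `global_average_pool` assumes a channels-first layout chosen purely for readability. The `gamma`, `beta`, and `eps` parameters follow the usual formulation, with values picked for the example.

```python
import math
from typing import List


def batch_normalize(values: List[float], gamma: float = 1.0,
                    beta: float = 0.0, eps: float = 1e-5) -> List[float]:
    """Standardize one feature across a batch, then scale and shift it."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(variance + eps) + beta
            for v in values]


def global_average_pool(feature_maps: List[List[List[float]]]) -> List[float]:
    """Collapse each 2D feature map (one per channel) to its mean value."""
    return [sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
            for channel in feature_maps]


if __name__ == "__main__":
    print(batch_normalize([1.0, 2.0, 3.0]))
    # Two 2x2 feature maps become two numbers.
    print(global_average_pool([[[1, 2], [3, 4]], [[0, 0], [0, 8]]]))
```

Pooling a stack of feature maps down to one number per channel is what keeps the classifier head small: the layers that follow receive a short vector instead of a full spatial grid.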

Overfitting occurs when a model learns the training data too well and consequently performs poorly on new, unseen data. To combat this, the study optimized the parameters of a Dropout layer. Dropout randomly deactivates neurons during training, forcing the network to learn more robust features. The researchers also experimented with different numbers of neurons and Dense layers at the end of the network to find the optimal structure.
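The mechanism behind dropout fits in a short function. The sketch below uses the common "inverted dropout" formulation, in which surviving activations are scaled up by 1 / (1 - rate) so their expected value is unchanged. The function name, the `rng` parameter, and the rate of 0.5 in the usage example are assumptions for illustration; the article does not report the dropout rate the researchers settled on.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Zero each activation with probability `rate` during training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # dropout is disabled at inference time
    rng = rng or random.Random()
    keep_probability = 1.0 - rate
    # Survivors are scaled up so the expected activation stays the same.
    return [a / keep_probability if rng.random() < keep_probability else 0.0
            for a in activations]


if __name__ == "__main__":
    seeded = random.Random(0)
    print(dropout([1.0] * 8, rate=0.5, rng=seeded))
    print(dropout([1.0] * 8, rate=0.5, training=False))
```

Because a different random subset of neurons is silenced on every training step, no single neuron can be relied on exclusively, which is the source of the regularizing effect.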

Crucially, the researchers also tuned the Adam optimizer, an algorithm that adjusts the model’s weights during training to minimize errors. Carefully adjusting Adam’s parameters improved the model’s performance further. Finally, they focused on extracting relevant image features to enhance the model’s accuracy.
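For readers curious about what "the parameters of Adam" are, the sketch below implements the standard Adam update rule for a single weight and uses it to minimize a simple quadratic. The default values for `lr`, `beta1`, `beta2`, and `eps` are the commonly cited defaults, not the values tuned in the study, which the article does not report. The function names and the toy objective are hypothetical.

```python
import math
from typing import Tuple


def adam_step(w: float, grad: float, m: float, v: float, t: int,
              lr: float = 0.001, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[float, float, float]:
    """Apply one Adam update to weight `w`; `t` is the 1-based step count."""
    m = beta1 * m + (1.0 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1.0 - beta2) * grad * grad   # running mean of squares
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


def minimize_quadratic(steps: int = 1000, lr: float = 0.1,
                       target: float = 3.0) -> float:
    """Minimize (w - target)^2 with Adam, starting from w = 0."""
    w, m, v = 0.0, 0.0, 0.0
    for t in range(1, steps + 1):
        grad = 2.0 * (w - target)  # derivative of the toy objective
        w, m, v = adam_step(w, grad, m, v, t, lr=lr)
    return w


if __name__ == "__main__":
    print(minimize_quadratic())
```

The learning rate sets the step size, while `beta1` and `beta2` control how much history the two running averages retain. These are the kinds of settings that tuning an optimizer involves.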

The results demonstrated that the optimized DenseNet201 model significantly outperformed other commonly used CNN architectures such as DenseNet121, DenseNet169, and ResNet50. The improvement in both classification accuracy and generalization ability suggests that the fine-tuning process was highly effective. The study’s success highlights the importance of adapting deep learning models to the specific characteristics of the task at hand, rather than simply relying on pre-trained models. Reference [2] provides a comprehensive overview of the DenseNet architecture and its various modifications. The current study builds on this foundation by demonstrating the benefits of targeted optimization for a specific application.


References

Main Study

1) Research on grape leaf classification based on optimized densenet201 model

Published 21st October, 2025

https://doi.org/10.1371/journal.pone.0334877

Related Studies
