This study is a meta-research study, a recent study design that seeks to understand how scientific practice is crafted, interpreted, and produced globally [17]. It rests on a thorough analysis of the methodological foundations of other studies, that is, research examining its own research methods. Because it is a meta-research study, no reporting checklist could be applied, as no suitable model exists in the literature.

The methodology of meta-research involves the systematic and critical analysis of scientific studies to evaluate and improve the quality, validity, transparency, and reproducibility of research [18]. This approach begins with the rigorous selection of existing studies using specific criteria and comprehensive databases. The selected studies are then methodologically evaluated using tools such as the Cochrane Risk of Bias Tool, PRISMA guidelines, and AMSTAR-2, which help identify and quantify potential biases, methodological flaws, and transparency gaps. The analysis also includes checking the availability of data and analysis code, as well as adherence to practices such as protocol pre-registration in PROSPERO, which are essential for ensuring the replicability of results [17, 19, 20, 21]. Beyond identifying and correcting methodological deficiencies in scientific studies, meta-research has significant practical implications: it guides research policies and the development of guidelines aimed at optimizing the use of resources in scientific research [19, 22]. For this analysis, we included secondary studies designed as systematic reviews (SRs) that included Randomized Controlled Trials (RCTs) or Non-Randomized Studies (NRS) from the health literature. The current meta-research study is registered on the Open Science Framework (OSF), with the registration protocol available at https://doi.org/10.17605/OSF.IO/B298V. Only one modification was made to the protocol: the terminology for AMSTAR-2 was corrected to match the official nomenclature of the tool.

Four scientific tools were used to interpret the results. The first is the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) checklist, published in 2009 to help authors report their reviews transparently and accountably, and since widely adopted. It was updated in 2020 to align with advances in systematic review terminology and methodology, and now contains 27 main items (disregarding sub-items) that describe important aspects of writing the abstract, introduction, methods, results, and discussion [17]. This allows an in-depth understanding of the reporting quality of the SRs by showing which essential items are commonly reported and which are absent. Another tool, which helps ensure that interventions in SRs are adequately described, is the Template for Intervention Description and Replication (TIDieR) [23]. Created in 2013, this checklist contains 12 items intended to improve the reporting quality of interventions by giving authors a structure to follow, helping reviewers and editors evaluate descriptions, and making interventions clearer and more replicable for readers [24].

AMSTAR-2 (A Measurement Tool to Assess Systematic Reviews), in turn, stands out as an instrument for evaluating the methodological quality of SRs of all study designs. It assesses 16 items, covering critical and non-critical domains, and judges the methodological quality of SRs based on the consistency and detail of the report [25,26,27]. Lastly, the GRADE (Grading of Recommendations Assessment, Development and Evaluation) approach is a transparent and universal tool created by the GRADE Working Group to build evidence summaries from scientific studies that better guide recommendations for clinical practice [28, 29]. It involves classifying the strength of recommendations and, above all, rating the quality of evidence (also known as the level of confidence in the effect estimates) for health study outcomes through a systematic and subjective judgment of several aspects of how an SR was conducted. Its use is therefore essential for interpreting the quality of published evidence [30, 31].

PI-ECOT

The PI-ECOT strategy, used to define the study’s central research question, is represented by the following elements:

- Population (P): Men and women living in low-middle income countries (LMICs);
- Intervention-Exposure (I-E): Smoking, alcohol consumption, imbalanced diet, or physical inactivity;
- Comparison (C): Absence of smoking, alcohol consumption, imbalanced diet, or physical inactivity;
- Outcome (O): Primary outcomes – evaluate the quality of the writing structure, the methodological conduct, the reporting of interventions or exposures, and the certainty of evidence of SRs that investigated the association between chronic Non-Communicable Diseases (NCDs) and behavioral risk factors in LMICs, according to every item of the PRISMA, AMSTAR-2, TIDieR, and GRADE tools. Secondary outcomes – summarize the effects described in each SR for the intervention on the specific risk factor analyzed, such as economic impact, reduction in hospitalizations, or improvement in well-being, quality of life, education resilience, social, physical, and occupational functioning, to better understand the context in which the intervention was delivered;
- Time (T): Between 2014 and 2024.

Inclusion and exclusion criteria

The eligibility criteria for inclusion were as follows: SRs addressing the association between at least one of the four most important modifiable behavioral risk factors (tobacco use, inadequate diet, alcohol consumption, and physical inactivity) and chronic NCDs in populations from low-middle income countries according to the ‘World Bank list of countries’ (2019) [32]. Different means of intervention (e.g., online, by phone, in person), delivered by different professionals (e.g., multidisciplinary health team, teachers) and in different sectors (e.g., primary, secondary, and tertiary care, health education), were considered. The temporal cut-off encompassed studies published in the last seven years (January 1, 2014 – December 31, 2021), a timeframe sufficient to capture the implementation of the GRADE (established in 2000), AMSTAR-2 (established in 2007), PRISMA (established in 2009), and TIDieR (established in 2013) tools, with the minimum cut-off year set at 2014 (one year after the creation of the TIDieR tool).

Furthermore, there were no language restrictions; digital translation tools were used for any study written in a language other than Portuguese, English, or Spanish. Studies were excluded if they met at least one of the following criteria: (1) not being an SR; (2) absence of lifestyle risk factors as intervention or exposure; (3) not including at least one NCD as an outcome; (4) a population originating from high-income countries (HICs).

Electronic searches

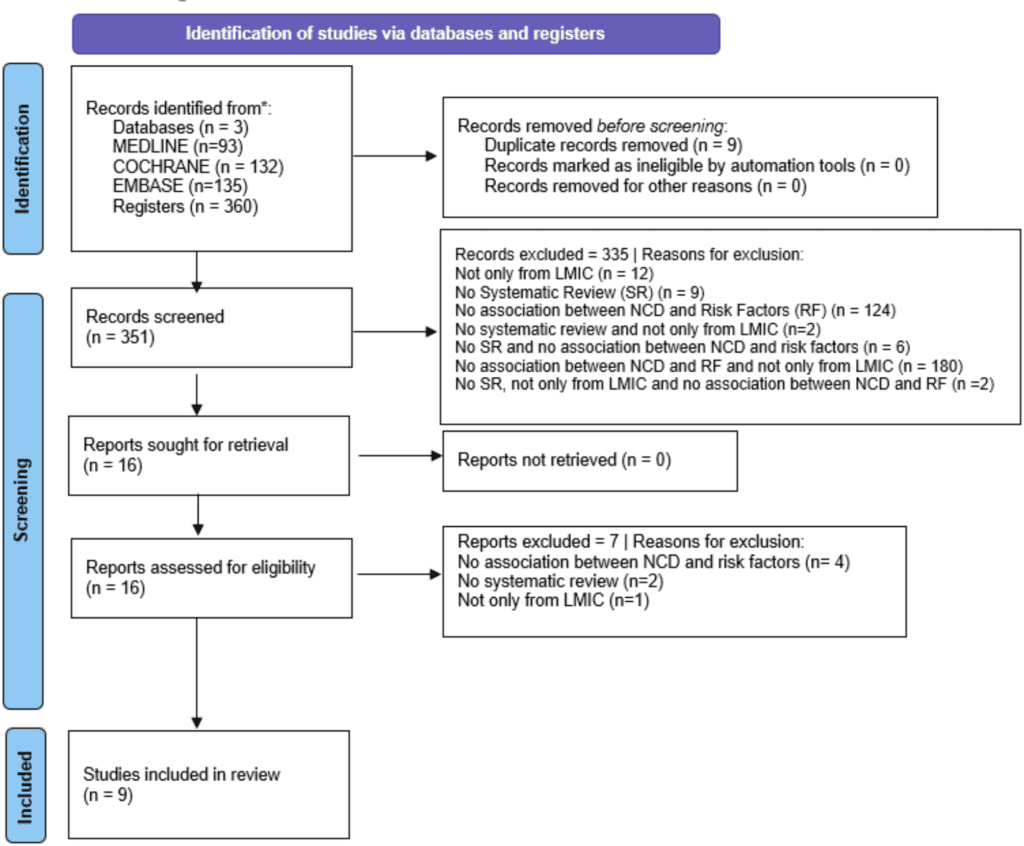

The databases used were MEDLINE (via PubMed), Embase (via Elsevier), and the Cochrane Library, as well as grey literature (http://www.greynet.org/opengreyrepository.html), with searches covering January 2014 to April 5, 2024. The search strategies are presented in Supplementary File 1 (Search Strategy), which was created separately because of the large number of terms employed and the length of the compiled search strategies. All of them were based on Medical Subject Headings (MeSH) terms related to the components of the PI-ECOT strategy. Manual searches were not conducted, in accordance with our protocol, and the grey literature search used the same strategy as the MEDLINE search.

Study selection

The search was conducted by two independent reviewers (GDB and GFMVS). Once all studies had been retrieved, duplicates were removed. The reviewers then read the titles and abstracts and classified each article as “yes” (meets the inclusion criteria), “no” (does not meet the inclusion criteria or meets an exclusion criterion), or “maybe” (appears to meet the inclusion criteria) in a blinded and independent manner using Rayyan, a free web-based platform designed for organizing articles for an SR. At the end of the selection process, the platform’s blinding was removed and discrepancies between the reviewers’ classifications were resolved by consensus. Articles finally classified as “no” were excluded, while those marked “yes” or “maybe” were read in full and assessed against the inclusion and exclusion criteria. An experienced reviewer (AJG) resolved any disagreements not settled by consensus and gave the final verdict. A comprehensive list of reasons for study exclusions is available in a supplementary file.
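The screening decision flow described above can be sketched in a few lines of Python. This is only an illustration, not the procedure implemented in Rayyan: the function name `screening_outcome`, the returned labels, and the assumption that a "yes"/"maybe" pair proceeds to full-text reading without further discussion are all hypothetical.

```python
from typing import Optional

VALID_VOTES = {"yes", "no", "maybe"}


def screening_outcome(vote_a: str, vote_b: str,
                      final_verdict: Optional[str] = None) -> str:
    """Combine two blinded title/abstract votes into a screening outcome.

    final_verdict is the label agreed by consensus or given by the third
    reviewer when the two votes conflict (hypothetical parameter).
    """
    for vote in (vote_a, vote_b):
        if vote not in VALID_VOTES:
            raise ValueError(f"unknown vote: {vote!r}")

    if vote_a == "no" and vote_b == "no":
        return "exclude"
    if "no" not in (vote_a, vote_b):
        # Both reviewers said "yes" or "maybe": read the full text.
        return "full_text"
    # One reviewer said "no" and the other did not: a resolution is needed.
    if final_verdict is None:
        return "unresolved"
    if final_verdict not in VALID_VOTES:
        raise ValueError(f"unknown verdict: {final_verdict!r}")
    return "exclude" if final_verdict == "no" else "full_text"
```

For example, `screening_outcome("yes", "no")` stays unresolved until a consensus or third-reviewer label is supplied as `final_verdict`.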

Data extraction

All included studies were imported into EndNote 20. The selected SRs (calculated N) were randomized on the “Randomizer” website, which generated a number sequence giving a completely random order for reading and data extraction. The EndNote 20 references were then separated for full-text reading and application of the approaches.
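An equivalent random reading order can be generated locally, which may help anyone wishing to reproduce this step without the website. The sketch below is not the tool used in the study; the function name `reading_order` and the fixed seed are assumptions chosen so that the sequence can be regenerated.

```python
import random
from typing import List


def reading_order(n_studies: int, seed: int = 2024) -> List[int]:
    """Return the study numbers 1..n_studies in a reproducible random order.

    The seed value is arbitrary (an assumption); fixing it only makes the
    same permutation recoverable later.
    """
    order = list(range(1, n_studies + 1))
    random.Random(seed).shuffle(order)  # in-place Fisher-Yates shuffle
    return order
```

Calling `reading_order(40)` twice returns the same permutation of the numbers 1 to 40, so the extraction order can be audited afterwards.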

Data extraction was carried out by applying the PRISMA, AMSTAR-2, TIDieR, and GRADE tools in full to the included studies. All of these approaches have a high degree of scientific validation and are recommended for the integrated assessment of SRs [23, 25, 28, 33, 34], each corresponding to a crucial domain for the construction of this research design.

Two reviewers (GFMVS and BHSC) independently extracted data and, for the primary outcomes, applied the checklists of the PRISMA (quality and bias in the reporting of systematic reviews), TIDieR (quality and bias in the reporting of intervention descriptions in systematic reviews), and AMSTAR-2 (quality and bias in systematic review methodology) tools. One reviewer (GDB) independently applied the GRADE tool (level of certainty and biases in the body of evidence).

Furthermore, the authors created a data extraction form to capture the main data of the included studies, recorded in a Microsoft Excel spreadsheet for transparent and objective analysis. The extraction sheet included the following domains:

(1) Study details: objective, project details, country where the study was conducted, funding, details about the intervention delivery location (i.e., city or community), target condition or risk factor (e.g., subliminal symptoms, coexistence of deleterious lifestyle habits), and the article’s self-reported use of at least one SR quality assessment tool (i.e., PRISMA, TIDieR, or GRADE).

(2) Participants: sample size (intervention and control groups at baseline and follow-up), sociodemographic characteristics (e.g., age and gender).

(3) Interventions/Exposures: description of the intervention/exposure, including frequency and duration, number of sessions, format (e.g., one-on-one or group), and intervention cost.

(4) Delivery of the intervention/exposure: setting where the intervention/exposure took place (e.g., school, home, health clinic), who delivered the intervention (e.g., doctor, nurse, psychologist, teacher, lay health worker), whether it was delivered by an individual practitioner or a team, and whether there was intersectoral collaboration (e.g., between health and education or child protective services).

(5) Comparison Groups: characteristics and procedures for the selection of comparison groups (e.g., matching vs. randomization).

(6) Results: effect obtained, with its size, direction, and confidence interval. When numerical data were not presented, the outcomes were summarized narratively.

To assess reporting quality, both the 2009 and 2020 versions of the PRISMA checklist were used, since some articles were published before the PRISMA 2020 version was released.

The PRISMA 2009 statement consists of a 27-item checklist used to assist authors in providing a transparent report of SRs and meta-analyses. Of the 27 items, one assesses the reporting of the title, one assesses the abstract, two assess the introduction, twelve assess the methods, seven assess the results, three assess the discussion, and one assesses the reporting of funding. The article by Moher et al. [33], providing the explanation and elaboration of the PRISMA 2009 checklist, was consulted to assess whether each item was informed appropriately.

The PRISMA statement criteria can be accessed in Supplementary Files 2.1 (Checklist 2009) and 2.2 (Checklist 2020). The reporting of each item in the included studies was classified as ‘Informed’ (I), ‘Partially Informed’ (PI), ‘Not Informed’ (NI), or ‘Not Applicable’ (NA). In PRISMA 2009, items 16 (‘Additional Analyses’), 21 (‘Synthesis of Results’), and 23 (‘Additional Analysis’) were classified as NA if the review did not involve a meta-analysis. Likewise, in PRISMA 2020, items 13e (‘Synthesis methods’) and 20c (‘Results of syntheses’) were considered NA in articles that did not conduct a meta-analysis. Items reported in an appendix or in a properly referenced protocol were also evaluated.

The PRISMA 2020 statement is an update of the PRISMA 2009 statement and consists of a main checklist and an abstract checklist. The main checklist has 27 main items: one assesses the reporting of the title, one the abstract, two the introduction, eleven the methods, seven the results, one the discussion, and four other information. The abstract checklist has 12 items and is a subdivision of main item 2 of the main checklist. In total, the checklist has 53 topics.

Unlike the PRISMA 2009 statement, the PRISMA 2020 statement subdivides some items, such as items 10 (‘Data items’), 13 (‘Synthesis methods’), 16 (‘Study selection’), 20 (‘Results of syntheses’), 23 (‘Discussion’), and 24 (‘Registration and protocol’). The article by Page et al. [34], which provides the explanation and elaboration of the PRISMA 2020 checklist, was consulted to assess whether each item was reported appropriately.

As an additional analysis, an overall score for related items of the PRISMA checklist, calculated as simple and weighted averages, was presented in bar charts to facilitate visualization. The maximum Total PRISMA 2020 Score is 51 for an article that did not conduct a meta-analysis and 53 for one that did (counting main items and sub-items). The maximum Total PRISMA 2009 Score is 24 for an article that did not conduct a meta-analysis and 27 for one that did.
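The way the maximum score depends on ‘Not Applicable’ items can be illustrated with a minimal Python sketch. The point values are assumptions, since the text does not state them: ‘Informed’ is taken as 1, ‘Partially Informed’ as 0.5, and ‘Not Informed’ as 0, with NA items removed from the maximum. The function name `prisma_total` is hypothetical, and the weighted average is not reproduced here because its weights are not given.

```python
from typing import Dict, Tuple

# Assumed point values per classification; the 0.5 for 'PI' in particular
# is an illustrative choice, not a value stated in the study.
POINTS = {"I": 1.0, "PI": 0.5, "NI": 0.0}


def prisma_total(ratings: Dict[str, str]) -> Tuple[float, int]:
    """Return (points obtained, maximum attainable) for one article.

    ratings maps an item label (e.g. "16" or "13e") to "I", "PI", "NI"
    or "NA". Items rated "NA" count neither for nor against the article.
    """
    applicable = [rating for rating in ratings.values() if rating != "NA"]
    obtained = sum(POINTS[rating] for rating in applicable)
    return obtained, len(applicable)
```

With the 27 PRISMA 2009 items and items 16, 21, and 23 rated NA, the maximum returned is 24, matching the figure for reviews without a meta-analysis.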

The results from the PRISMA 2009 and PRISMA 2020 statements were also synthesized in individual tables for both statements obtained from the PRISMA website. All tables for individual studies are available in Supplementary Files 3.1 and 3.2 (Individual Study Tables). Each item was classified as ‘Informed’, ‘Partially Informed’, ‘Not Informed’, or ‘Not Applicable’, with location of all information identified in the individual tables.

The AMSTAR-2 tool is used to assess the methodological quality of SRs and comprises 16 items, of which seven are critical and nine are non-critical [25,26,27]. The results of each article’s assessment were presented in a table in which the 16 items were rated ‘Yes’, ‘Partial Yes’, ‘No’, or ‘No meta-analysis conducted’, with critical items marked with an ‘*’. Based on the fulfilment or non-fulfilment of these items, the articles were classified as having ‘High’, ‘Moderate’, ‘Low’, or ‘Critically Low’ methodological quality: ‘High’ if no critical item and no more than one non-critical item was unfulfilled; ‘Moderate’ if no critical item but more than one non-critical item was unfulfilled; ‘Low’ if one critical item was unfulfilled (with or without failures in non-critical items); and ‘Critically Low’ if more than one critical item was unfulfilled.
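The overall rating rule lends itself to a short Python sketch. Two points are assumptions rather than statements from this text: the critical item numbers (2, 4, 7, 9, 11, 13, 15) follow the published AMSTAR-2 guidance, and only an answer of ‘No’ is counted as an unfulfilled item. The sketch also treats a single non-critical weakness with no critical flaw as ‘High’, as in that guidance. The function name `amstar2_rating` is hypothetical.

```python
from typing import Dict

# Critical items per the published AMSTAR-2 guidance (assumption: the
# text here only says that critical items are marked with '*').
CRITICAL_ITEMS = {2, 4, 7, 9, 11, 13, 15}


def amstar2_rating(answers: Dict[int, str]) -> str:
    """Map the 16 item answers of one review to an overall rating.

    Assumption: only 'No' counts as unfulfilled; 'Yes', 'Partial Yes'
    and 'No meta-analysis conducted' do not.
    """
    failed = {item for item, answer in answers.items() if answer == "No"}
    critical_flaws = len(failed & CRITICAL_ITEMS)
    non_critical_weaknesses = len(failed - CRITICAL_ITEMS)

    if critical_flaws > 1:
        return "Critically Low"
    if critical_flaws == 1:
        return "Low"
    if non_critical_weaknesses > 1:
        return "Moderate"
    return "High"
```

A review answering ‘No’ only on items 1 and 3 would be rated ‘Moderate’, while a single ‘No’ on item 2 is enough for ‘Low’.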

The tool used to assess the reporting quality of interventions was the Template for Intervention Description and Replication (TIDieR). The checklist contains 12 items, including the brief name of the intervention/exposure, why it was performed, what it is (materials and procedures involved), who performed it, how, where, and when it was performed, the costs involved, any customizations and modifications, and the satisfaction level when employed [23, 24]. Each TIDieR item was classified as ‘Informed’ or ‘Not Informed’ according to whether it was fulfilled [24]. At the end of the table, a column called ‘Number of Studies (Percent)’ was added with the absolute number and percentage of studies for the corresponding item. The score of each article, in terms of TIDieR items considered ‘Informed’ and ‘Not Informed’, was also presented in bar charts for easier visualization. All tables for individual studies are available in Supplementary File 4.
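The ‘Number of Studies (Percent)’ column can be derived mechanically from the item-level classifications. The following Python sketch assumes the column reports, for each TIDieR item, how many studies were classified as ‘Informed’ and what share of all studies that represents; the data layout and the function name `tidier_item_summary` are hypothetical.

```python
from typing import Dict, Tuple


def tidier_item_summary(
    table: Dict[str, Dict[str, bool]]
) -> Dict[str, Tuple[int, float]]:
    """Count, per TIDieR item, the studies classified as 'Informed'.

    table maps a study label to {item name: True if 'Informed'}.
    Returns {item name: (number of studies, percent of all studies)}.
    """
    n_studies = len(table)
    if n_studies == 0:
        return {}

    items = sorted({item for row in table.values() for item in row})
    summary = {}
    for item in items:
        # A missing item is treated as 'Not Informed' (assumption).
        informed = sum(1 for row in table.values() if row.get(item, False))
        summary[item] = (informed, round(100 * informed / n_studies, 1))
    return summary
```

For four studies of which three report the brief name of the intervention, the entry for that item would be `(3, 75.0)`.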

The GRADE approach is a well-structured and transparent method whose central goal is to rate the quality of evidence for study outcomes [28]. The result is a final classification into one of the levels ‘Very Low’, ‘Low’, ‘Moderate’, or ‘High’. This grading is based on a final consensus judgment over five domains that can decrease quality (Risk of Bias, Inconsistency, Indirectness, Imprecision, and Publication Bias) and three domains that can increase quality (Large Effect Size, Dose Response Gradient, and Possible Confounding Factors) [29].
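As a rough illustration of how the domains move a rating between levels, the Python sketch below treats each downgrade or upgrade as one step on the four-level scale. This is a deliberate simplification: GRADE ratings are consensus judgments rather than arithmetic, and the starting level (commonly ‘High’ for randomized evidence and ‘Low’ for observational evidence) is an assumption not stated in this text. The function name `grade_level` is hypothetical.

```python
LEVELS = ["Very Low", "Low", "Moderate", "High"]


def grade_level(start: str, downgrades: int, upgrades: int = 0) -> str:
    """Shift a starting certainty level by whole steps, clamped to the scale.

    downgrades: total steps removed across the five downgrading domains.
    upgrades: total steps added across the three upgrading domains.
    """
    if downgrades < 0 or upgrades < 0:
        raise ValueError("step counts must be non-negative")
    position = LEVELS.index(start) - downgrades + upgrades
    position = max(0, min(position, len(LEVELS) - 1))
    return LEVELS[position]
```

Under these assumptions, evidence starting at ‘High’ with one step removed for Risk of Bias and one for Imprecision would end at ‘Low’.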

The GRADE results, in addition to being presented in a synthesized table, were also entered into a Summary of Findings (SoF) table, created in the free web-based Guideline Development Tool application recommended by the GRADE handbook [28], to classify the certainty of evidence into five categories: ‘Unmeasured’, ‘Very Low’, ‘Low’, ‘Moderate’, and ‘High’. The SoF table is a routine part of applying the GRADE approach. Its purpose is to facilitate the determination of outcome types and the certainty of evidence by making all assessment items for an outcome visible and tabulated. Each of these aspects was also presented with its respective effect measures as found in the assessment, including Percentage of Prevalence (%), Odds Ratio (OR), Mean Difference (MD), and Standardized Mean Difference (SMD). As no meta-analysis was performed in our study, no strategy for handling heterogeneity was employed.

To recover any missing data (e.g., methods, outcomes, supplementary material), two emails (one for notification and another as a reminder) were planned to be sent to the authors 7 days apart. However, there was no need to request missing data because no missing information was identified.