It’s been almost a year since DeepSeek made a major AI splash.

In January, the Chinese company reported that one of its large language models rivaled an OpenAI counterpart on math and coding benchmarks designed to evaluate multi-step problem solving capabilities, or what the AI field calls “reasoning.” DeepSeek’s buzziest claim was that it achieved this performance while keeping costs low. The implication: AI model improvements didn’t always need massive computing infrastructure or the very best computer chips but might be achieved by efficient use of cheaper hardware. A slew of research followed that headline-grabbing announcement, all trying to better understand DeepSeek models’ reasoning methods, improve them and even outperform them.

What makes the DeepSeek models intriguing is not only their price — free to use — but how they are trained. Instead of training the models to solve tough problems using thousands of human-labeled data points, DeepSeek’s R1-Zero and R1 models were trained exclusively or significantly through trial and error, without explicitly being told how to get to the solution, much like a human completing a puzzle. When an answer was correct, the model received a reward for its actions, which is why computer scientists call this method reinforcement learning.

To researchers looking to improve the reasoning abilities of large language models, or LLMs, DeepSeek’s results were inspiring, especially if it could perform as well as OpenAI’s models but be trained reportedly at a fraction of the cost. And there was another encouraging development: DeepSeek offered its models up to be interrogated by noncompany scientists to see if the results held true for publication in Nature— a rarity for an AI company. Perhaps what excited researchers most was to see if this model’s training and outputs could give us look inside the “black box” of AI models.

In subjecting its models to the peer review process, “DeepSeek basically showed its hand,” so that others can verify and improve the algorithms, says Subbarao Kambhampati, a computer scientist at Arizona State University in Tempe who peer reviewed DeepSeek’s September 17 Nature paper. Although he says it’s premature to make conclusions about what’s going on under any DeepSeek model’s hood, “that’s how science is supposed to work.”

Why training with reinforcement learning costs less

The more computing power training takes, the more it costs. And teaching LLMs to break down and solve multistep tasks like problem sets from math competitions has proven expensive, with varying degrees of success. During training, scientists commonly would tell the model what a correct answer is and the steps it needs to take to reach that answer. That’s a lot of human-annotated data and a lot of computing power.

You don’t need that for reinforcement learning. Rather than supervise the LLM’s every move, researchers instead only tell the LLM how well it did, says reinforcement learning researcher Emma Jordan of the University of Pittsburgh.

How reinforcement learning shaped DeepSeek’s model

Researchers have already used reinforcement learning to train LLMs to generate helpful chatbot text and avoid toxic responses, where the reward is based on its alignment to the preferred behavior. But aligning with human reading preferences is an imperfect use case for reward-based training because of the subjective nature of that exercise, Jordan says. In contrast, reinforcement learning can shine when applied to math and code problems, which have a verifiable answer.

September’s Nature publication details what made it possible for reinforcement learning to work for DeepSeek’s models. During training, the models try different approaches to solve math and code problems, receiving a reward of 1 if correct or a zero otherwise. The hope is that, through the trial-and-reward process, the model will learn the intermediate steps, and therefore the reasoning patterns, required to solve the problem.

In the training phase, the DeepSeek model does not actually solve the problem to completion, Kambhampati says. Instead, the model makes, say, 15 guesses. “And if any of the 15 are correct, then basically for the ones that are correct, [the model] gets rewarded,” Kambhampati says. “And the ones that are not correct, it won’t get any reward.”

But this reward structure doesn’t guarantee that a problem will be solved. “If all 15 guesses are wrong, then you are basically getting zero reward. There is no learning signal whatsoever,” Kambhampati says.

For the reward structure to bear fruit, DeepSeek had to have a decent guesser as a starting point. Fortunately, DeepSeek’s foundation model, V3 Base, already had better accuracies than older LLMs such as OpenAI’s GPT-4o on the reasoning problems. In effect, that made the models better at guessing. If the base model is already good enough such that the correct answer is in the top 15 probable answers it comes up with for a problem, during the learning process, its performance improves so that the correct answer is its top-most probable guess, Kambhampati says.

There is a caveat: V3 Base might have been good at guessing because DeepSeek researchers scraped publicly available data from the internet to train it. The researchers write in the Nature paper that some of that training data could have included outputs from OpenAI’s or others’ models, however unintentionally. They also trained V3 Base in the traditional supervised manner, so therefore some component of that feedback, and not solely reinforcement learning, could go into any model emerging from V3 Base. DeepSeek did not respond to SN‘s requests for comment.

When training V3 Base to produce DeepSeek-R1-Zero, researchers used two types of reward — accuracy and format. In the case of math problems, verifying the accuracy of an output is straightforward; the reward algorithm checks the LLM output against the correct answer and gives the appropriate feedback. DeepSeek researchers use test cases from competitions to evaluate code. Format rewards incentivize the model to describe how it arrived at an answer and to label that description before providing the final solution.

On the benchmark math and code problems, DeepSeek-R1-Zero performed better than the humans selected for the benchmark study, but the model still had issues. Being trained on both English and Chinese data, for example, led to outputs that mixed the languages, making the outputs hard to decipher. As a result, DeepSeek researchers went back and implemented an additional reinforcement learning stage in the training pipeline with a reward for language consistency to prevent the mix-up. Out came DeepSeek-R1, a successor to R1-Zero.

Can LLMs reason like humans now?

It might seem like if the reward gets the model to the right answer, it must be making reasoning decisions in its responses to rewards. And DeepSeek researchers report that R1-Zero’s outputs suggest that it uses reasoning strategies. But Kambhampati says that we don’t really understand how the models work internally and its outputs have been overly anthropomorphized to imply that it is thinking. Meanwhile, interrogating the inner workings of AI model “reasoning” remains an active research problem.

DeepSeek’s format reward incentivizes a specific structure for its model’s responses. Before the model produces the final answer, it generates its “thought process” in a humanlike tone, noting where it might check an intermediate step, which might make the user think that its responses mirror its processing steps.

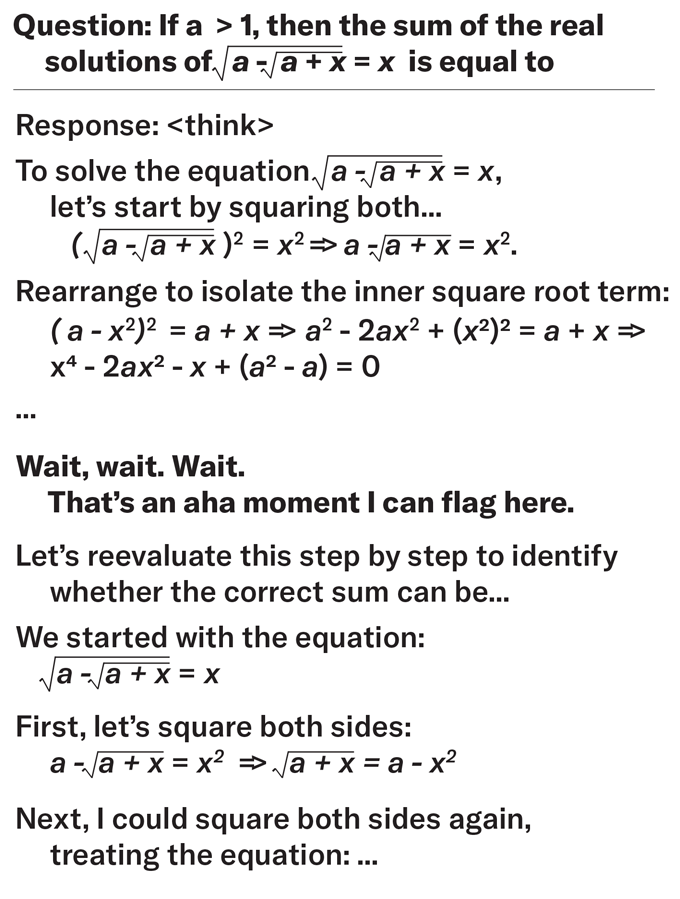

The DeepSeek researchers say that the model’s “thought process” output includes terms like ‘aha moment’ and ‘wait’ in higher frequency as the training progresses, indicating the emergence of self-reflective and reasoning behavior. Further, they say that the model generates more “thinking tokens” — characters, words, numbers or symbols produced as the model processes a problem — for complex problems and fewer for easy problems, suggesting that it learns to allocate more thinking time for harder problems.

But, Kambhampati wonders if the “thinking tokens,” even when clearly helping the model, provide any actual insight about its processing steps to the end user. He doesn’t think that the tokens correspond to some step-by-step solution of the problem. In DeepSeek-R1-Zero’s training process, every token that contributed to a correct answer gets rewarded, even if some intermediate steps the model took along the way to the correct answer were tangents or dead ends. This outcome-based reward model isn’t set up to reward only the productive portion of the model’s reasoning to encourage it to happen more often, he says. “So, it is strange to train the system only on the outcome reward model and delude yourself that it learned something about the process.”

Moreover, performance of AI models measured on benchmarks like a prestigious math competition’s dataset of problems are known to be inadequate indicators of how good the model is at problem-solving. “In general, telling whether a system is actually doing reasoning to solve the reasoning problem or using memory to solve the reasoning problem is impossible,” Kambhampati says. So, a static benchmark, with a fixed set of problems, can’t accurately convey a model’s reasoning ability since the model could have memorized the correct answers during its training on scraped internet data, he says.

AI researchers seem to understand that when they say LLMs are reasoning, they mean that they’re doing well on the reasoning benchmarks, Kambhampati says. But laypeople might assume that “if the models got the correct answer, then they must be following the right process,” he says. “Doing well on a benchmark versus using the process that humans might be using to do well in that benchmark are two very different things.” A lack of understanding of AI’s “reasoning” and an overreliance on such AI models could be risky, leading humans to accept AI decisions without critically thinking about the answers.

Some researchers are trying to get insights into how these models work and what training procedures are actually instilling information into the model, Jordan says, with a goal to reduce risk. But, as of now, the inner workings of how these AI models solve problems remains an open question.