Training topic model with Afaan Oromo health documents

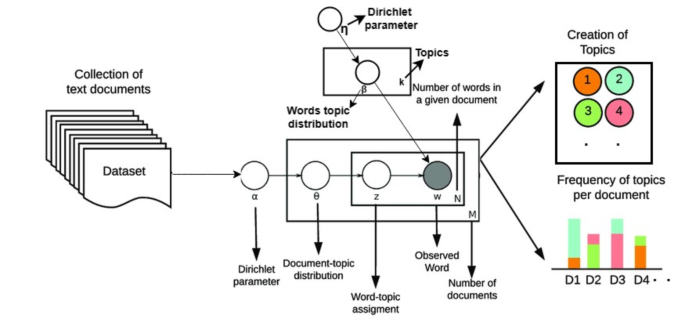

The aim of topic modeling is to mine the main topics of unstructured documents17. In our methodology, we used LDA, a generative probabilistic model and a popular topic modeling technique for discrete data sets such as text corpora23,30,41. LDA receives the pre-processed texts as input and groups the words in the text documents by similarity of meaning42. Each word in a topic is associated with a conditional probability that represents a degree of confidence, that is, how relevant the word or term is to that cluster’s topic. The resulting groups of words signify the diverse topics in the documents. The main difficulty of using the LDA model is that it does not label the extracted topics, as mentioned in35,43. To solve this issue, we manually labeled each group of words with a theme. In our work, we limited the output to 10 topics and the top 10 words per topic. When domain experts act as human judges to interpret the topic model results, the most effective technique is to carefully analyze the underlying keywords of the model output. In this way, the issue of unlabeled extracted topics is resolved. After labeling the acquired clusters of words, we extracted the most representative keywords of each topic and each document. We first extracted a topic-versus-document matrix. In this matrix, every term is associated with the probability of belonging to a specific topic.

The following is a summary of the steps carried out during LDA model training.

1. To implement topic detection and to process the topic-keyword distribution and the topic-document distribution, the multicore LDA model of the Gensim package is used.

2. The best number of topics found by running the LDA model on our corpus is 10, and for each topic we used 10 keywords.

3. The final step assigns topics to each document using the model by finding the probability distribution of each sentence over the generated topics.

Finally, we obtained the topics of the documents and, for each topic, assigned a label along with the most illustrative words associated with those documents.
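The assignment in step 3 can be illustrated with a minimal Python sketch. It assumes that each document already has a list of `(topic_id, weight)` pairs, as a trained LDA model would produce; the function name `dominant_topic`, the document identifiers, and the weights are hypothetical and serve only as an illustration, not as the implementation used in this work.

```python
def dominant_topic(topic_weights):
    """Return the (topic_id, weight) pair with the highest weight for one document."""
    if not topic_weights:
        raise ValueError("document has no topic weights")
    return max(topic_weights, key=lambda pair: pair[1])


# Hypothetical per-document topic distributions (illustrative values only).
doc_topics = {
    "doc_a": [(0, 0.10), (4, 0.75), (9, 0.15)],
    "doc_b": [(1, 0.62), (5, 0.38)],
}

# Assign every document to its single most probable topic.
assignments = {doc_id: dominant_topic(w) for doc_id, w in doc_topics.items()}
```

Here `assignments` maps each document to the topic that contributes most to it, which is the basis of the dominant-topic view discussed in the next subsection.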

A. Dominant topic extraction and output visualization.

Table 2 reports some of the topics extracted from the electronic health documents. As observed in this experiment, every topic consists of 10 keywords, and each extracted word has a corresponding probability. We used the 10 most probable keywords for each topic. A few words may not make sense for their topics, but most are relevant to the associated topic. Some of the topics are a little mixed and their meaning can be dissimilar, but most of the extracted topics make sense and can be treated as categories in the documents. To achieve this topic and keyword selection, we relied on model evaluation metrics, namely coherence values to assess topic interpretability and perplexity scores to measure the model’s ability to predict data. These metrics were evaluated iteratively by varying the number of topics and observing the impact on model performance. This selection procedure was guided by both quantitative metrics and qualitative assessments by the domain experts to ensure transparency and relevance. Keywords are listed in descending order of probability. The meaning of each keyword is provided in English in the context of medical concepts.
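The keyword ranking described above can be sketched as follows. This is a minimal illustration that assumes a topic is available as a mapping from word to probability; the helper `top_keywords` is hypothetical, and the probabilities attached to the Topic 0 words are invented for the example and are not results from Table 2.

```python
def top_keywords(word_probs, n=10):
    """Return the n most probable (word, probability) pairs, highest first."""
    ranked = sorted(word_probs.items(), key=lambda item: item[1], reverse=True)
    return ranked[:n]


# Words taken from Topic 0; the probabilities are invented for illustration.
topic_0 = {"dhagna": 0.08, "dugda": 0.05, "gogsa": 0.03, "dhufee": 0.06}

# Keep only the two most probable keywords of this toy topic.
best_two = top_keywords(topic_0, n=2)
```

With the default `n=10`, the same call yields the ten-keyword lists used throughout this section.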

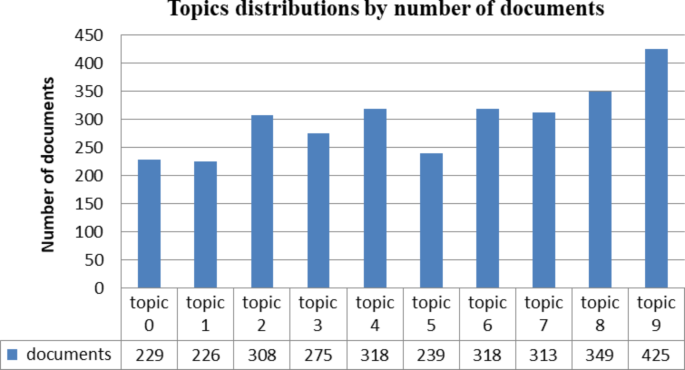

As stated in the topic model training section, every text document in the corpus is assigned to one theme class based on its highest probability contribution, that is, its dominant topic. After training the LDA model, we present, for each sentence, the distribution of topics in the document, the weight of the topic, and the keywords in a neatly formatted output; sample results are shown in Table 3. Figure 4 illustrates the document-topic distribution weights for all documents in our corpus.

B. Topic labeling.

In this experiment, topic modeling produces word distributions that yield meaningful topics for the chosen number of topics. Each word has a probability within each topic, and each topic has a weight within each document. Once the LDA model has been generated, a document can be characterized by the distribution of topics that describes its collection of terms. The LDA model uses a bag-of-words (BOW) assumption, which means that the order of words in the document is ignored. The generated topics with their important keywords are listed in Table 2. Each term under each topic or theme is assigned a weight, with the highest-ranking words having greater weights.

Defining the core meaning of these extracted topics is crucial. However, the model does not tag topics with labels automatically. Because LDA only returns groups of keywords known as topics, the labels are assigned manually by carefully observing the grouped words in each topic. Despite the developments in statistical methods, the interpretability of the model output is not assured because of the difficulty of the language44. Therefore, when merging the word matrix of each topic, labels must be assigned manually so that they correctly reflect the internal association and context of the corresponding keywords. To label the extracted topics, we involved three domain expert annotators. The labeling process was guided by balancing interpretability and coherence values to ensure accurate and meaningful labels. When the annotators disagreed, particularly over ambiguous words such as homonyms or polysemous words, we resolved the disagreement by majority vote. This procedure is expected to maintain consistency and strengthen the interpretability of the extracted topics in the medical context. The extracted topics were labeled together with their distribution results so that each topic could be described easily. In Topic 0, the most frequent keywords, “dhagna”, “dhufee”, “gogsa”, “roqomsiisa”, “dugda”, and “qorachiisu”, show that the topic is about nervous-related disease. The most common words in Topic 1, “ulfaa”, “hordoffi”, “laguu”, “dhiigatu”, “darbee”, “garmalee”, and “baayyata”, imply that the topic is about gynecology. Topic 2 is mental illness, Topic 3 is eye disease, Topic 4 is ear disease and disorders, Topic 5 is internal disease, Topic 6 is chronic disease, Topic 7 is skin disease, Topic 8 is orthopedic conditions, and Topic 9 is dental-related disease. The labels are presented in Table 4.
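The majority rule used to resolve annotator disagreements can be sketched in a few lines of Python. The function `majority_label` and the example votes are hypothetical; in particular, returning `None` when no label receives more than half of the votes is an assumption of this sketch, since the text does not state how a three-way split would be handled.

```python
from collections import Counter


def majority_label(votes):
    """Return the label chosen by more than half of the annotators, else None."""
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    return label if count > len(votes) / 2 else None


# Three hypothetical annotators label the same topic.
agreed = majority_label(["gynecology", "gynecology", "internal disease"])

# A three-way split has no majority in this sketch.
undecided = majority_label(["eye disease", "ear disease", "skin disease"])
```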

C. Topic model evaluation.

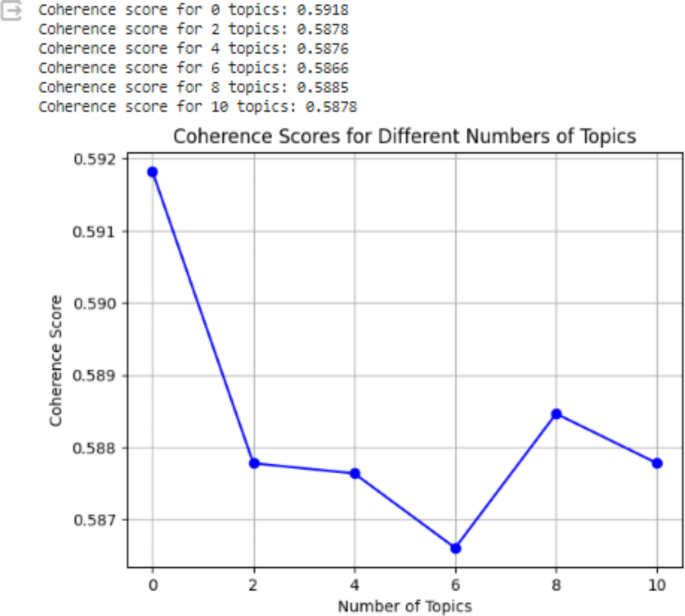

To evaluate the output of the model, we used two metrics: the coherence value (Cv) score from the topic coherence framework and the quantitative perplexity measure39,40.

Perplexity.

Perplexity is a standard quantitative method for evaluating topic models. It measures how well the model predicts the probability of sample words over the documents, based on term occurrences in the topics. Perplexity45 is algebraically equivalent to the inverse of the geometric mean per-word likelihood. For a test corpus Dtest, it is calculated as the exponential of the negative mean per-word log-likelihood, as presented in Eq. 1. In topic modeling, a lower perplexity value indicates better generalization ability.

$$\text{Perplexity}\left(D_{\text{test}}\right)=\exp\left(-\frac{\sum_{d=1}^{M}\log p\left(w_{d}\right)}{\sum_{d=1}^{M}N_{d}}\right)$$

(1)

Where Dtest is the test dataset, M is the number of documents in the test dataset, wd represents the words in document d, Nd is the number of words in document d, and p(wd) is the likelihood of document d. In our experiment, the perplexity value of the LDA model with 10 topics is −6.403. Because the model requires the number of topics K as a mandatory parameter, we used perplexity to help estimate K for our dataset. The reported value is negative because it is a log perplexity: the logarithm of a probability smaller than one is negative.
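As a worked illustration of Eq. 1, the following Python sketch computes perplexity from per-document log-likelihoods and document lengths. The function name `perplexity` and the toy numbers are assumptions made for the example: two documents in which every word has probability 0.1, so the expected perplexity is 10. The sketch also shows why a per-word log-likelihood is negative.

```python
import math


def perplexity(doc_log_likelihoods, doc_lengths):
    """Eq. 1: exp of the negative total log-likelihood divided by the total word count."""
    total_words = sum(doc_lengths)
    if total_words == 0:
        raise ValueError("test corpus contains no words")
    return math.exp(-sum(doc_log_likelihoods) / total_words)


# Toy test corpus: 3 words and 2 words, each word with probability 0.1.
doc_lengths = [3, 2]
doc_log_likelihoods = [n * math.log(0.1) for n in doc_lengths]

value = perplexity(doc_log_likelihoods, doc_lengths)

# The mean per-word log-likelihood is log(0.1), which is negative.
per_word_log_likelihood = sum(doc_log_likelihoods) / sum(doc_lengths)
```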

Coherence value.

In topic modeling, the coherence value measures the semantic similarity between the words in each extracted topic17. When the generated topics are semantically interpretable, topic coherence scores are high. Several measures based on the word co-occurrence scores of the most significant terms of each topic have been applied in the topic extraction literature. The best practice for determining how interpretable a topic is consists of evaluating the coherence of the topic. Human topic ranking is the gold standard for assessing coherence, but it is expensive. In the current experiment, we implemented a particular case of topic coherence using Eq. 2.

$$C_{v}=\frac{2}{N\left(N-1\right)}\sum_{i=1}^{N-1}\sum_{j=i+1}^{N}sim\left(w_{i},w_{j}\right)$$

(2)

Where N is the number of terms in the top-N list for the given topic and sim(wi, wj) is the Jaccard similarity between the sets of documents containing words wi and wj.
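Eq. 2 can be illustrated with a short Python sketch that averages the pairwise Jaccard similarity over the top words of one topic. The function names and the document sets below are hypothetical; the sketch follows Eq. 2 as written here and is not a description of how any particular library computes its coherence scores.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity between two sets of document IDs."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def topic_coherence(top_words, docs_containing):
    """Eq. 2: mean pairwise Jaccard similarity over the top-N words of a topic."""
    n = len(top_words)
    if n < 2:
        raise ValueError("at least two words are required")
    total = sum(
        jaccard(docs_containing[wi], docs_containing[wj])
        for wi, wj in combinations(top_words, 2)
    )
    return 2.0 * total / (n * (n - 1))


# Hypothetical sets of document IDs in which each Topic 1 word occurs.
docs_containing = {
    "ulfaa": {1, 2, 3},
    "hordoffi": {2, 3},
    "laguu": {3, 4},
}
score = topic_coherence(["ulfaa", "hordoffi", "laguu"], docs_containing)
```

In this toy case the three pairwise similarities are 2/3, 1/4, and 1/3, so the score is their mean, 1.25/3.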

In our experiment, the topic model was run with 2, 4, 6, 8, and 10 topics, and the observed coherence score was nearly the same for each setting, ranging from 0.5918 to 0.5878. In this work, the extracted topics are well interpretable at 10 topics, considering both coherence values and human expert judgment. The coherence values obtained with our methodology are illustrated in Fig. 5.

In this work, we assessed our model results against interpretability criteria through human inspection and topic coherence metrics. For the human judgment, the decision for each resulting topic was recorded manually to state whether or not the topic conveys a meaning.

To mine more specific medical information, a technique is needed to improve retrieval performance. Finding the documents in a collection that are relevant to a user query is known as information retrieval (IR)46. Because information retrieval must deal with ambiguous knowledge, exact processing techniques are not appropriate47, and probabilistic models are more suitable. In such models, relations carry probability weights that correspond to the significance of the document, and these weights reflect different ranks of relevance. Current IR systems typically return sorted lists of documents in which the top results are those the system considers most likely to be relevant to the query. In some methods, users can judge the returned results and tell the system which ones are relevant to them; the system then re-sorts the result set. Documents that contain many of the query terms are ranked with a higher probability value48. Such relevance feedback processes are known to greatly improve IR performance. A document ID, document name, or document keywords can serve as the search key for the corpus. In our experiment, we used document identity numbers as search queries to retrieve the respective topic information through the LDA model. The model thus tries to retrieve relevant topic information for the query entered by the user. Under the LDA model assumptions, every document is a mixture of topics, and the model can also visualize this topic mix and the dominant topics in each document. For instance, when the user provides document ID 1018, which contains “gurra keessa waraana, ciniina”, the model displays the topic weight distribution of that document. This means that the model finds the information about the given document ID in our corpus. The topic mix for sample documents is presented in Table 5.
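The lookup of a topic mix by document ID can be sketched as follows. This minimal example assumes that the topic weights of every document have already been stored in a dictionary keyed by document ID; the function `topic_mix`, the index, the document ID 101, and all weights are hypothetical and do not reproduce the values of Table 5.

```python
def topic_mix(doc_id, doc_topic_index, min_weight=0.0):
    """Return the (topic_id, weight) pairs of one document, highest weight first."""
    if doc_id not in doc_topic_index:
        raise KeyError(f"unknown document ID: {doc_id}")
    mix = [(t, w) for t, w in doc_topic_index[doc_id].items() if w >= min_weight]
    return sorted(mix, key=lambda pair: pair[1], reverse=True)


# Hypothetical index: document ID -> {topic_id: weight} (illustrative values only).
index = {101: {4: 0.55, 9: 0.40, 0: 0.05}}

full_mix = topic_mix(101, index)                  # all topics of document 101
main_mix = topic_mix(101, index, min_weight=0.1)  # drop negligible topics
```

The first entry of the returned list is the dominant topic of the queried document, and the remaining entries describe the rest of its topic mixture.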

Document classification analysis and evaluation

As mentioned in the dominant topic extraction section above, each document was allocated to one category based on its highest topic distribution weight. This is important when the topic model is run on the same dataset repeatedly to find the best number of topics k. After training the LDA model to extract the topics, we went further and used the model to classify Afaan Oromo health documents. We carried out document categorization experiments according to the topics. Once a topic classifier has been trained using the LDA model, the topic distribution in the documents needs to be tested.

We randomly selected 600 documents (20% of the total dataset) as a test set. After performing the experiment, we manually assigned a specific label to every test document. We then compared the manually labeled test documents with the LDA-labeled documents using common metrics, namely accuracy and F1 score, to determine whether the model is good enough to be applied to the rest of the dataset. Although our model classifies most of the problem statements accurately and gives the correct class labels for the test documents, we also analyzed a few examples where the model could not give accurate results because of constraints in our dataset.

In the context of topic modeling and NLP, polysemy (words with multiple meanings) and homonymy (words that are spelled the same but have different meanings) are major challenges that can significantly affect the interpretability and accuracy of the model. For instance, in our dataset the word “Dhukkuba” can mean “disease” or “pain”. In a medical document, it could refer to a specific illness, general pain, or symptoms, depending on its context. The word “Nyaata” can mean “food” (noun) or “eats” (verb); the context of usage determines whether it refers to the food itself or to the act of eating. This can make it difficult for the model to distinguish clearly between topics. Nevertheless, our model shows promising results on the test documents. In this experiment, we obtained 79.17% accuracy and a 79.66% F1 score for the LDA model results, which we tested on data similar to the training set. These metrics are promising, especially in the context of unsupervised topic modeling, where such performance levels are often considered robust. In this framework, the scores show the model’s ability to generate coherent topics that align well with the underlying structure of the data.
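The comparison between manually assigned labels and LDA-assigned labels can be sketched in plain Python. The functions below are hypothetical helpers, the label lists are toy data, and the use of a macro-averaged F1 score is an assumption of this sketch, since the averaging scheme behind the reported F1 score is not stated.

```python
def accuracy(y_true, y_pred):
    """Fraction of documents whose predicted label matches the manual label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores (assumed averaging scheme)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy example: manual topic labels versus model-assigned dominant topics.
manual = [0, 0, 1, 1, 2, 2]
predicted = [0, 1, 1, 1, 2, 0]

acc = accuracy(manual, predicted)
f1 = macro_f1(manual, predicted)
```

In this toy case four of six documents match, and the per-class F1 scores are 0.5, 0.8, and 2/3, which the macro average weights equally.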

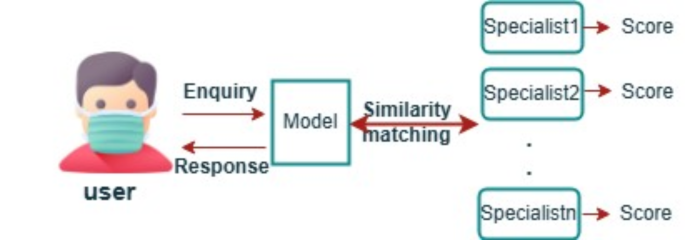

Medical specialist recommendation to patients

In this work, we present a recommender method that helps users find solutions for their health conditions. Individuals spend considerable time and effort searching for doctors and medical departments by specialty. For this task, we have created the corpus, the topics, and the documents associated with specific labels. The proposed method provides efficient medical specialist recommendations to fill this gap. The model takes an inquiry from the user as input, matches it to the most suitable medical specialty, and returns the most probable suggestions for the patient based on the highest similarity weight. The model selects the most similar documents with the highest predictive value for personalized recommendation to the target users. Our integrated system recommends the documents with the highest predicted score to the users, as presented in Fig. 6. The model result is deemed accurate if the patient’s consultation query falls within the disease class in which the suggested medical professionals are highly skilled.

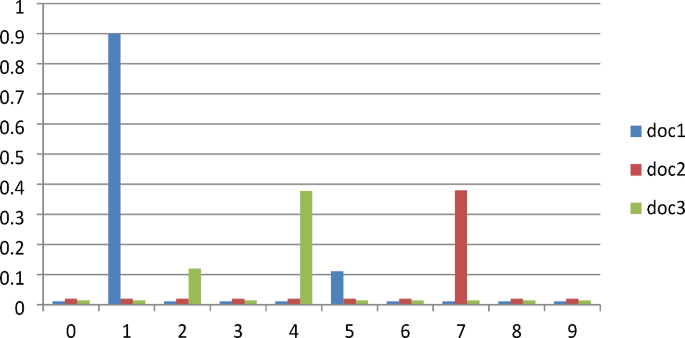

Finally, we used our model to predict suitable medical specialists for new documents or patient queries provided by the users. In this experiment, the LDA output, that is, the topics and the topic probabilities associated with the training documents, yields labeled documents that can then be used to classify unseen documents. Figure 7 illustrates the document-word distribution weights for the ten topics or specialist departments. Each document under each topic is assigned a weight, with higher-ranking documents having greater weights. Based on this ranking, the model recommends the highest-weighted topic name for each submitted document, as presented in Table 6. The users can also view the model-generated response for the unseen documents they provide.

Graphical representation of the document-topic distribution of unseen data using our model (Note: Nervous disease = 0, Gynecology = 1, Mental illness = 2, Eye disease = 3, Ear disease and disorders = 4, Internal disease = 5, Chronic disease = 6, Skin disease = 7, Orthopedic conditions = 8, Dental disease = 9).
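The final recommendation step, in which the highest-weighted topic of an unseen document is mapped to a specialist department, can be sketched as follows. The topic-to-label mapping follows the note above; the function `recommend` and the example weights for the unseen query are hypothetical and only illustrate the idea under these assumptions.

```python
# Topic ID -> specialist department, following the label note above.
SPECIALTIES = {
    0: "Nervous disease",
    1: "Gynecology",
    2: "Mental illness",
    3: "Eye disease",
    4: "Ear disease and disorders",
    5: "Internal disease",
    6: "Chronic disease",
    7: "Skin disease",
    8: "Orthopedic conditions",
    9: "Dental disease",
}


def recommend(topic_weights, top_n=1):
    """Return the top_n (department, weight) pairs for one unseen document."""
    ranked = sorted(topic_weights.items(), key=lambda kv: kv[1], reverse=True)
    return [(SPECIALTIES[topic], weight) for topic, weight in ranked[:top_n]]


# Hypothetical topic weights inferred for an unseen patient query.
query_weights = {3: 0.70, 5: 0.20, 6: 0.10}

best = recommend(query_weights)              # single best department
ranked = recommend(query_weights, top_n=2)   # two best departments
```

Returning more than one department with `top_n` is a design choice of this sketch; it may be useful when a query mixes symptoms from several specialties.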